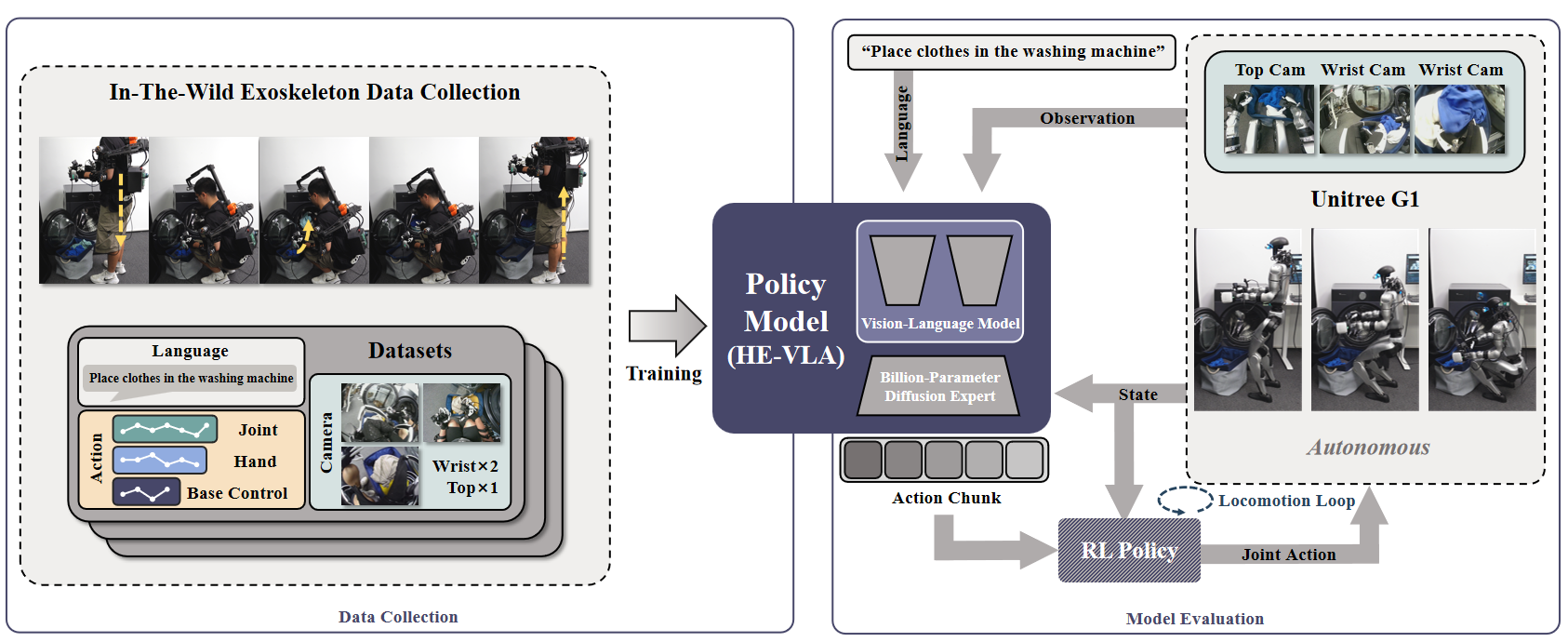

A significant bottleneck in humanoid policy learning is the acquisition of large-scale, diverse datasets, as collecting reliable real-world data remains both difficult and cost-prohibitive. To address this limitation, we introduce HumanoidExo, a novel system that transfers human motion into whole-body humanoid data. HumanoidExo minimizes the embodiment gap between the human demonstrator and the robot, providing an efficient way to tackle the scarcity of whole-body humanoid data. By enabling the collection of larger and more diverse datasets, our approach significantly improves the performance of humanoid robots in dynamic, real-world scenarios. We evaluated our method on three challenging real-world tasks: tabletop manipulation, manipulation combined with stand-squat motions, and whole-body manipulation. Our results empirically demonstrate that HumanoidExo data is a crucial complement to real-robot data: it enables the humanoid policy to generalize to novel environments, learn complex whole-body control from only five real-robot demonstrations, and even acquire a new skill (walking) solely from HumanoidExo data.

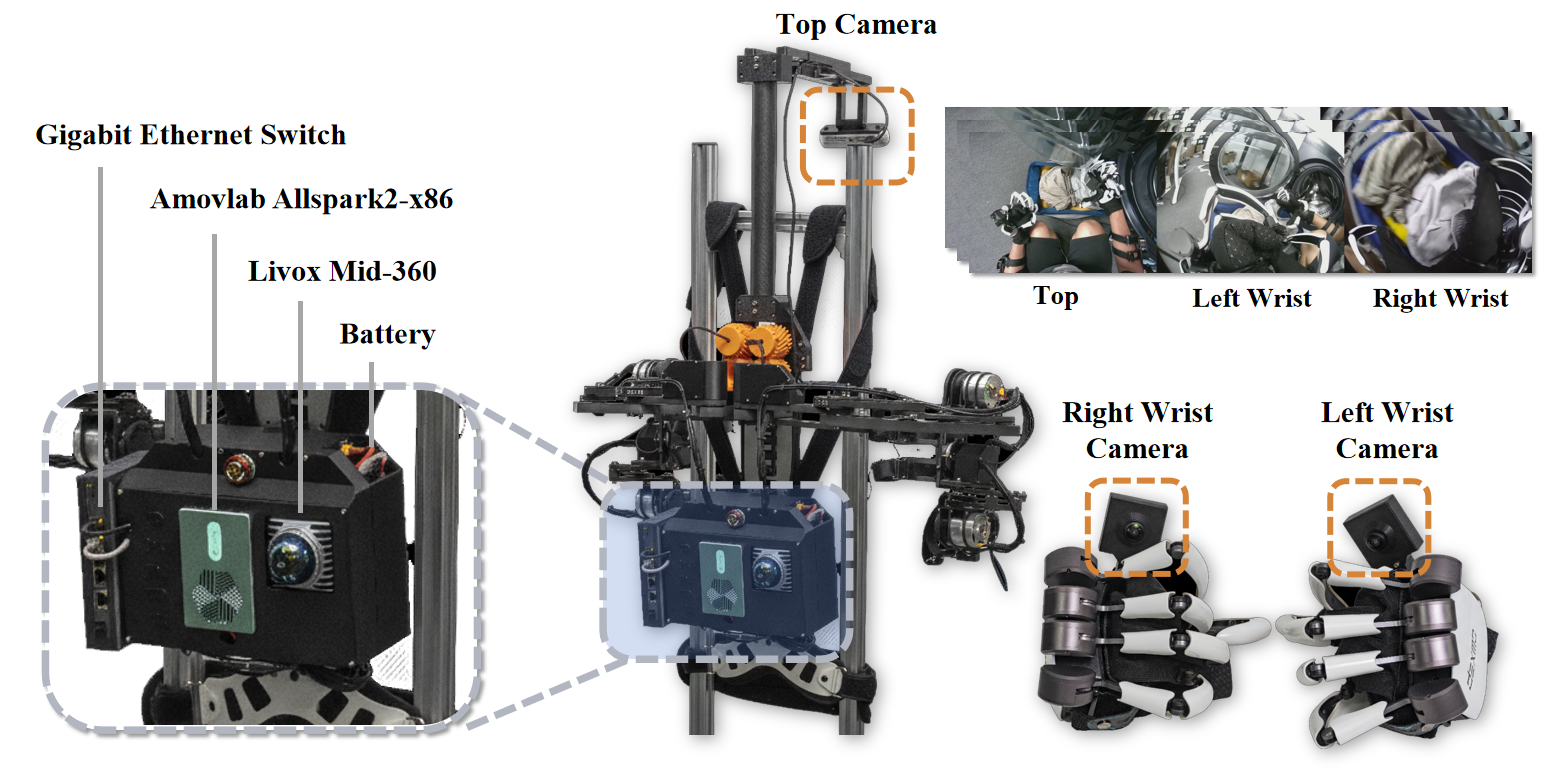

We integrated a Mid-360 LiDAR to estimate the odometry of the exoskeleton as the demonstrator moves. For visual information, we added two wrist cameras that provide additional viewpoints of the manipulation and enrich environmental perception. These cameras are mounted on the Dexmo force-feedback gloves at the same angles as the robot's cameras.
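For odometry to supervise locomotion, a stream of poses has to become velocity labels. One plausible way to do this is finite differencing in the body frame. The sketch below assumes planar poses `(x, y, yaw)` arriving at a fixed period `dt` from some LiDAR odometry pipeline; `Pose2D`, `wrap_angle`, `poses_to_commands`, and the planar simplification are hypothetical illustrations, not the authors' published pipeline.

```python
import math
from typing import List, Tuple

# (x [m], y [m], yaw [rad]) in the odometry frame; assumed to come from a LiDAR odometry pipeline
Pose2D = Tuple[float, float, float]


def wrap_angle(angle: float) -> float:
    """Wrap an angle in radians to [-pi, pi)."""
    return (angle + math.pi) % (2.0 * math.pi) - math.pi


def poses_to_commands(poses: List[Pose2D], dt: float) -> List[Tuple[float, float, float]]:
    """Finite-difference consecutive poses into body-frame (vx, vy, yaw_rate) labels.

    The world-frame displacement is rotated into the heading of the earlier pose,
    so vx is forward speed and vy is lateral speed relative to the demonstrator.
    """
    if dt <= 0:
        raise ValueError("dt must be positive")
    commands = []
    for (x0, y0, th0), (x1, y1, th1) in zip(poses[:-1], poses[1:]):
        dx, dy = x1 - x0, y1 - y0
        c, s = math.cos(th0), math.sin(th0)
        vx = (c * dx + s * dy) / dt    # forward component
        vy = (-s * dx + c * dy) / dt   # lateral component
        yaw_rate = wrap_angle(th1 - th0) / dt  # wrapping avoids a spurious jump at +/- pi
        commands.append((vx, vy, yaw_rate))
    return commands
```

Differencing raw odometry amplifies noise, so smoothing the poses first would likely be needed in practice; that choice is left open here.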

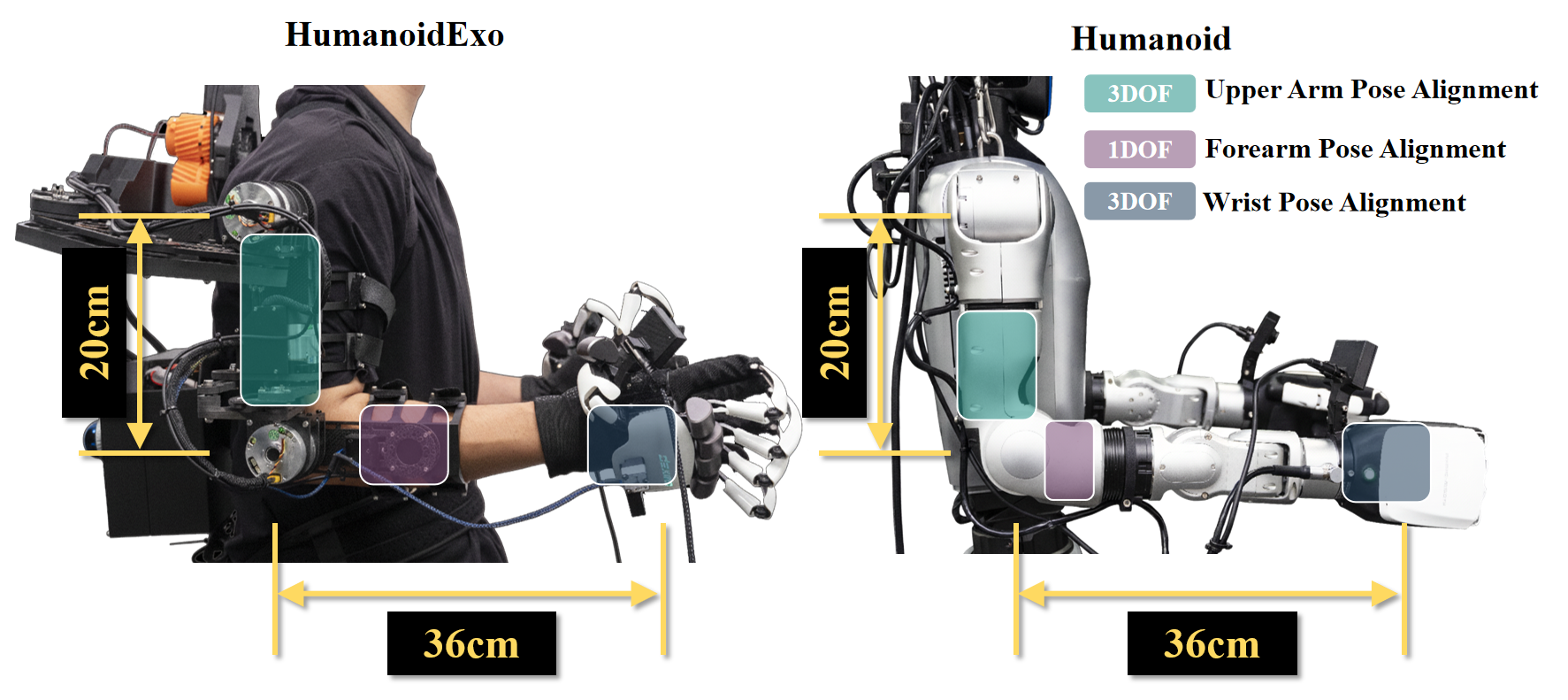

HumanoidExo is designed to measure all seven joints of the human arm. The rotational axes of its exoskeleton arm are precisely aligned with the corresponding axes of the human joints, making the exoskeleton isomorphic to the human arm. Because HumanoidExo uses joint-space control with angle remapping, we redesigned the exoskeleton's key parameters to match the arm length of the Unitree G1 robot.
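Because the exoskeleton arm is isomorphic to the human arm and its dimensions were matched to the G1, angle remapping can in principle be as simple as a per-joint sign flip, a zero offset, and a clip to the robot's joint limits. The sketch below illustrates that idea; `JointMap`, `remap_arm`, and all calibration values are hypothetical, not HumanoidExo's actual calibration.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class JointMap:
    """Hypothetical calibration for one joint: exoskeleton encoder angle -> robot joint angle."""
    sign: float    # +1.0, or -1.0 if the encoder and robot axes point in opposite directions
    offset: float  # robot angle (rad) when the encoder reads zero
    lower: float   # robot joint lower limit (rad)
    upper: float   # robot joint upper limit (rad)


def remap_arm(exo_angles: Sequence[float], maps: Sequence[JointMap]) -> List[float]:
    """Map exoskeleton joint angles to robot joint targets, one entry per joint."""
    if len(exo_angles) != len(maps):
        raise ValueError("need exactly one JointMap per measured joint")
    targets = []
    for q, m in zip(exo_angles, maps):
        target = m.sign * q + m.offset
        targets.append(min(max(target, m.lower), m.upper))  # respect robot joint limits
    return targets


# Seven joints, as measured by the exoskeleton; calibration values are illustrative only.
arm_maps = [JointMap(sign=-1.0, offset=0.25, lower=-1.0, upper=1.0)] * 7
print(remap_arm([0.5, -2.0, 0.0, 0.0, 0.0, 0.0, 0.0], arm_maps))
# prints [-0.25, 1.0, 0.25, 0.25, 0.25, 0.25, 0.25]
```

The second joint shows the clip at work: the raw remapped value of 2.25 rad exceeds the illustrative upper limit and is saturated to 1.0 rad.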

Our approach, HumanoidExo-VLA (HE-VLA for short), consists of two key components: a pre-trained Vision-Language-Action (VLA) model that learns foundational whole-body motion control, and a reinforcement learning (RL) controller that ensures robust whole-body balance. To learn complex humanoid manipulation skills, we build on DexVLA, a pre-trained vision-language-action model, for the tasks described in our experiments.

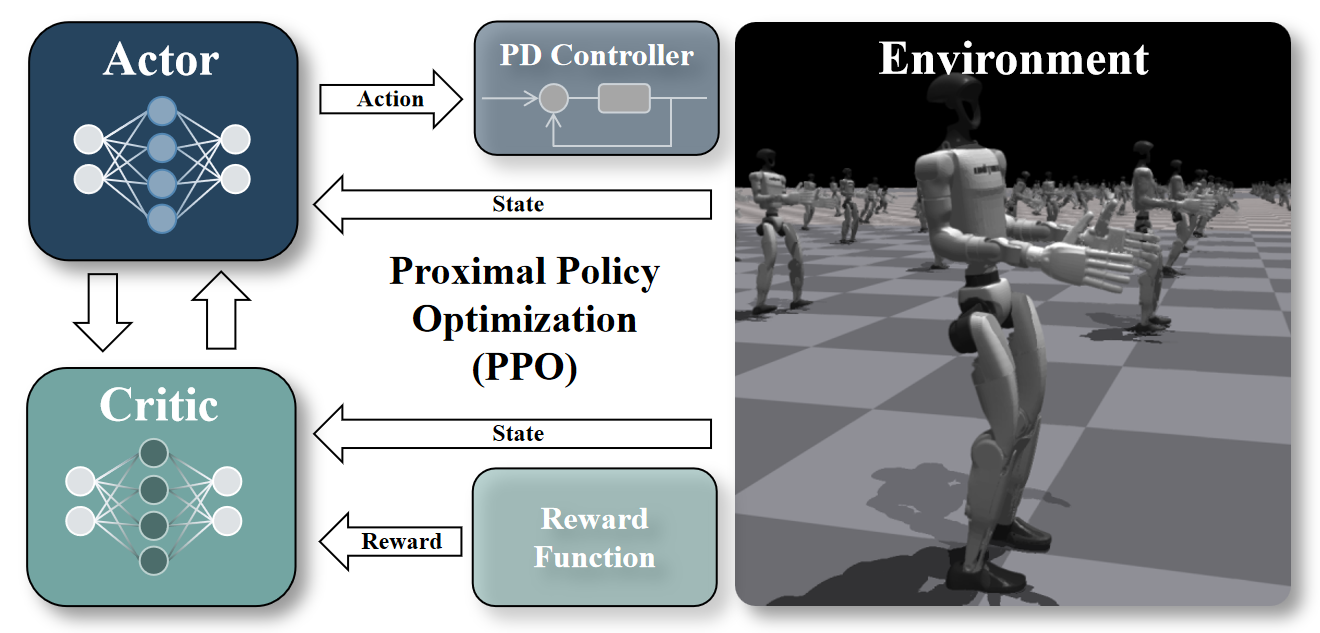

Relying solely on imitation learning to directly output joint positions for whole-body control introduces significant stability risks: minor deviations from the learned trajectories can cause falls, risking serious damage to the robot and its environment. To overcome this limitation, we use reinforcement learning to train a robust whole-body loco-manipulation controller. This controller maintains dynamic balance while tracking commands for base speed, yaw rate, and a target torso height.
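The division of labor can be pictured as an interface: the VLA emits upper-body joint targets plus a small locomotion command, and only the command reaches the RL controller, after being clipped to the range the controller was trained on. The sketch assumes a flat action vector whose last four entries are `(vx, vy, yaw_rate, torso_height)`; this layout, the names `LocoCommand` and `split_action`, and the limits in `LIMITS` are illustrative assumptions, not DexVLA's or HE-VLA's actual action space.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class LocoCommand:
    vx: float            # forward base speed, m/s
    vy: float            # lateral base speed, m/s
    yaw_rate: float      # rad/s
    torso_height: float  # target torso height, m


# Illustrative command ranges only; the real controller's training ranges are not given.
LIMITS = {
    "vx": (-0.5, 0.8),
    "vy": (-0.3, 0.3),
    "yaw_rate": (-1.0, 1.0),
    "torso_height": (0.45, 0.75),
}


def _clip(value: float, bounds: Tuple[float, float]) -> float:
    lo, hi = bounds
    return min(max(value, lo), hi)


def split_action(action: Sequence[float]) -> Tuple[List[float], LocoCommand]:
    """Split a hypothetical flat VLA action into upper-body targets and a clipped command."""
    if len(action) < 4:
        raise ValueError("action must end with (vx, vy, yaw_rate, torso_height)")
    upper_body = list(action[:-4])
    vx, vy, wz, h = action[-4:]
    cmd = LocoCommand(
        vx=_clip(vx, LIMITS["vx"]),
        vy=_clip(vy, LIMITS["vy"]),
        yaw_rate=_clip(wz, LIMITS["yaw_rate"]),
        torso_height=_clip(h, LIMITS["torso_height"]),
    )
    return upper_body, cmd


upper, cmd = split_action([0.1] * 14 + [2.0, 0.0, -3.0, 0.6])
print(len(upper), cmd)
```

Clipping is a safety choice in this sketch: an RL controller is only trustworthy on commands resembling its training distribution, so an out-of-range prediction is saturated rather than passed through.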

This is a tabletop manipulation task. The robot must pick up a toy, placed at a random position on its left or right side, and put it into a tray at the center. In the following videos, the HE-VLA model was trained on 5 teleoperated demonstrations and 195 HumanoidExo demonstrations.

This experiment builds upon the previous tabletop manipulation setup. We collected 195 new HumanoidExo demonstrations of a compound task: walking to the table, stopping, and then executing the 'Place Toy' action. The policy was then trained on a mixed dataset containing these 195 new HumanoidExo demonstrations and the same 5 teleoperated demonstrations from the previous experiment. Crucially, these five teleoperated demonstrations contain only the stationary manipulation portion of the task and include no walking. Therefore, any walking ability exhibited by the final policy must be learned exclusively from the HumanoidExo data.
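The argument that walking must come from HumanoidExo data rests on a property of the dataset that can be checked mechanically: only HumanoidExo episodes contain nonzero base-speed commands. The sketch below builds a toy 5 + 195 mixture with a hypothetical `Episode` record and synthetic speed traces (placeholders, not the real data) and reports which sources contain walking.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class Episode:
    source: str                                            # "teleop" or "humanoidexo"
    base_speed: List[float] = field(default_factory=list)  # per-step forward speed, m/s


def walking_sources(episodes: List[Episode], eps: float = 1e-3) -> Set[str]:
    """Return every data source that contains at least one step with |speed| > eps."""
    return {ep.source for ep in episodes if any(abs(v) > eps for v in ep.base_speed)}


# Synthetic placeholder traces: teleop episodes are stationary, exo episodes walk then stop.
teleop = [Episode("teleop", [0.0] * 50) for _ in range(5)]
exo = [Episode("humanoidexo", [0.4] * 30 + [0.0] * 20) for _ in range(195)]
mixed = teleop + exo

print(len(mixed))                # 200
print(len(teleop) / len(mixed))  # 0.025
print(walking_sources(mixed))    # {'humanoidexo'}
```

With this split, real-robot episodes make up only 2.5% of the training set, and none of them exercise the locomotion commands.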

We chose the 'Place Laundry' task, in which the robot must squat, grasp clothes from a basket, and place them into a washing machine on its right. The robot repeats this process until the basket is empty, then stands up to signal task completion. This task presents several challenges. The clothes are deformable objects that are difficult for dexterous hands to grasp and to place entirely inside the machine, so the model must exhibit recovery behaviors when an attempt fails. Furthermore, the model must rely on robust visual observations while maintaining whole-body balance so that it does not fall while executing the upper-body task. As in the previous experiments, the HE-VLA model in the following videos was trained on 5 teleoperated demonstrations and 195 HumanoidExo demonstrations.

Beyond manipulation, we also explored the role of HumanoidExo in learning robot navigation skills. We collected data in an outdoor environment for the following task: the robot must keep walking along a blue track until it exits the track, and then stop. All training data came exclusively from HumanoidExo; no teleoperated data was used. In our experiment, a policy trained entirely on HumanoidExo data successfully achieved simple autonomous navigation.

In this work, we addressed the critical data bottleneck that hinders the development of capable, general-purpose humanoid robots. While existing approaches such as simulation, human videos, and direct teleoperation have advanced the field, they suffer from significant limitations in scalability, cost, and embodiment mismatch. We introduced HumanoidExo, a lightweight, wearable exoskeleton system that provides a practical and effective solution for scalable whole-body data collection. Our experiments confirm that this approach is highly effective. We have shown that HumanoidExo data enables policies to generalize to new environments, achieve remarkable data efficiency by learning complex skills from as few as five real-robot demonstrations, and even acquire entirely new skills such as walking without any robot demonstrations of that skill. These results validate our system as a powerful paradigm for generating large-scale, high-quality humanoid datasets.

@article{zhong2025humanoidexo,
  title={HumanoidExo: Scalable Whole-Body Humanoid Manipulation via Wearable Exoskeleton},
  author={Rui Zhong and Yizhe Sun and Junjie Wen and Jinming Li and Chuang Cheng and Wei Dai and Zhiwen Zeng and Huimin Lu and Yichen Zhu and Yi Xu},
  journal={arXiv preprint arXiv:2510.03022},
  year={2025}
}